Prebuilt Algorithms

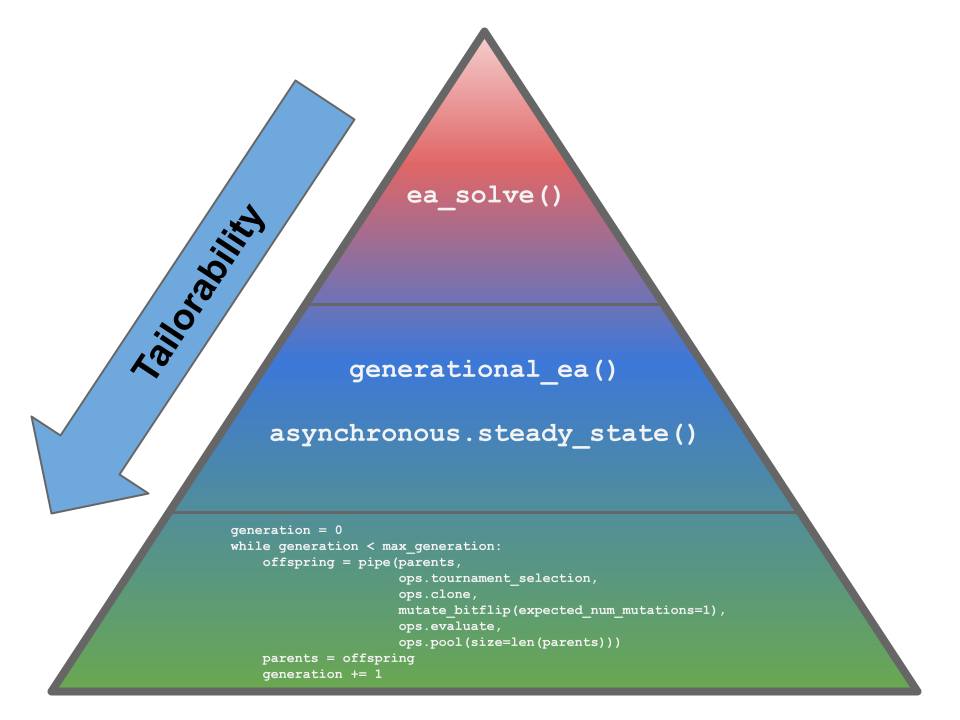

Fig. 9 The three tiers of tailorability for using LEAP. LEAP has three levels of abstraction with gradually increasing order of customization. The top-level has ea_solve() that is ideal for real-valued optimization problems. The mid-level has two functions that allows for some tailoring of the pipeline and representation, generational_ea() and steady_state(). The bottom-most tier provides maximum flexibility and control over an EA’s implementation, and involves the practitioner assembling bespoke EAs using LEAP low-level components, as shown by the code snippet.

Fig. 9 depicts the top-level entry-point, ea_solve(), and has the least customization, but is ideal for real-valued optimization problems. The mid-level allows for more user customization. generational_ea() allows for implementing most traditional evolutionary algorithms, such as genetic algorithms and evolutionary programs. asynchronous.steady_state() is used to implement an asynchronous steady-state EA suitable for HPC platforms as they make the best use of HPC resources. The bottom-most level provides the greatest amount of flexability, and is where users implement their evolutionary algorithms using low-level LEAP components.

ea_solve() and generational_ea() is documented below. asynchronous.steady_state() is documented in Asynchronous fitness evaluations. Information on the bottom-most tier can be found in Implementing Tailored Evolutionary Algorithms with LEAP.

ea_solve()

Example

And example using ea_solve() can be found in examples/simple/simple.py.

generational_ea()

- leap_ec.algorithm.generational_ea(max_generations: int, pop_size: int, problem, representation, pipeline, stop=<function <lambda>>, init_evaluate=<bound method Individual.evaluate_population of <class 'leap_ec.individual.Individual'>>, k_elites: int = 1, start_generation: int = 0, context={'leap': {'distrib': {'non_viable': 0}}})

This function provides an evolutionary algorithm with a generational population model.

When called this initializes and evaluates a population of size pop_size using the init_evaluate function and then pipes it through an operator pipeline (i.e. a list of operators) to obtain offspring. Wash, rinse, repeat.

The algorithm is provided here at the “metaheuristic” level: in order to apply it to a particular problem, you must parameterize it with implementations of its various components. You must decide the population size, how individuals are represented and initialized, the pipeline of reproductive operators, etc. A metaheuristic template of this kind can be used to implement genetic algorithms, genetic programming, certain evolution strategies, and all manner of other (novel) algorithms by passing in appropriate components as parameters.

- Parameters

max_generations (int) – The max number of generations to run the algorithm for. Can pass in float(‘Inf’) to run forever or until the stop condition is reached.

pop_size (int) – Size of the initial population

stop (int) – A function that accepts a population and returns True iff it’s time to stop evolving.

problem (Problem) – the Problem that should be used to evaluate individuals’ fitness

representation – How the problem is represented in individuals

pipeline (list) – a list of operators that are applied (in order) to create the offspring population at each generation

init_evaluate – a function used to evaluate the initial population, before the main pipeline is run. The default of Individual.evaluate_population is suitable for many cases, but you may wish to pass a different operator in for distributed evaluation or other purposes.

k_elites – keep k elites

start_generation – index of the first generation to count from (defaults to 0). You might want to change this, for example, in experiments that involve stopping and restarting an algorithm.

- Returns

the final population

The intent behind this kind of EA interface is to allow the complete configuration of a basic evolutionary algorithm to be defined in a clean and readable way. If you define most of the components in-line when passing them to the named arguments, then the complete configuration of an algorithmic experiment forms one concise code block. Here’s what a basic (mu, lambda)-style EA looks like (that is, an EA that throws away the parents at each generation in favor of their offspring):

>>> from leap_ec import Individual, Representation >>> from leap_ec.algorithm import generational_ea, stop_at_generation >>> from leap_ec.binary_rep.problems import MaxOnes >>> from leap_ec.binary_rep.initializers import create_binary_sequence >>> from leap_ec.binary_rep.ops import mutate_bitflip >>> import leap_ec.ops as ops >>> pop_size = 5 >>> final_pop = generational_ea(max_generations=100, pop_size=pop_size, ... ... problem=MaxOnes(), # Solve a MaxOnes Boolean optimization problem ... ... representation=Representation( ... initialize=create_binary_sequence(length=10) # Initial genomes are random binary sequences ... ), ... ... # The operator pipeline ... pipeline=[ ... ops.tournament_selection, # Select parents via tournament selection ... ops.clone, # Copy them (just to be safe) ... mutate_bitflip(expected_num_mutations=1), # Basic mutation with a 1/L mutation rate ... ops.UniformCrossover(p_swap=0.4), # Crossover with a 40% chance of swapping each gene ... ops.evaluate, # Evaluate fitness ... ops.pool(size=pop_size) # Collect offspring into a new population ... ])

The algorithm runs immediately and returns the final population:

>>> print(*final_pop, sep='\n') Individual<...> ... Individual<...> ... Individual<...> ... ... Individual<...> ...

You can get the best individual by using max (since comparison on individuals is based on the Problem associated with them, this will return the best individaul even on minimization problems):

>>> max(final_pop) Individual<...>...

Example

And example using generational_ea() can be found in examples/simple/int_rep.py.